‘Universally unable to classify non-binary genders’

University of Colorado-Boulder researchers have discovered a new danger from artificial intelligence: It’s not always woke.

In the November edition of the Proceedings of the ACM on Human-Computer Interaction, computer scientists Morgan Klaus Scheuerman, Jacob Paul and Jed Brubaker evaluated “gender classification in commercial facial analysis and image labeling services.”

They found that systems designed to recognize people by their face also recognize them by their sex – as in, the immutable characteristics that make them male or female, reflected in the very construction of their faces.

This is problematic for individuals who identify as the opposite sex, nonbinary or other amorphous identities not rooted in biology.

The researchers did a system analysis of 10 services and then ran “a custom dataset of diverse genders using self-labeled Instagram images” through half of them:

We found that FA services performed consistently worse on transgender individuals and were universally unable to classify non-binary genders. In contrast, image labeling often presented multiple gendered concepts. We also found that user perceptions about gender performance and identity contradict the way gender performance is encoded into the computer vision infrastructure.

The findings alarmed Quartz, an online publication that targets “a new kind of business leader,” because they showed that major AI-based facial recognition systems from Amazon, IBM and Microsoft “habitually misidentified non-cisgender people”:

The researchers gathered 2,450 images of faces from Instagram, searching under the hashtags #woman, #man, #transwoman, #transman, #agenderqueer, and #nonbinary. They eliminated instances in which multiple individuals were in the photo, or where at least 75% of the person’s face wasn’t visible. The images were then divided by hashtag, amounting to 350 images in each group. Scientists then tested each group against the facial analysis tools of the four companies.

The systems were most accurate with cisgender men and women, who on average were accurately classified 98% of the time. Researchers found that trans men were wrongly categorized roughly 30% of the time.

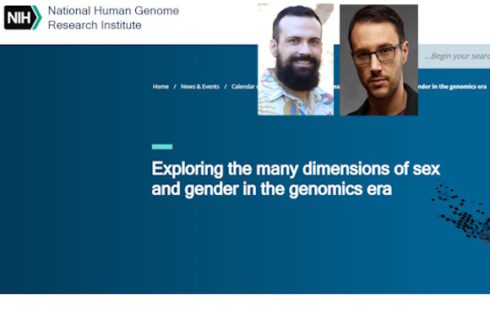

Part of the annoyance for researcher Scheuerman is personal: Half the systems misidentified the long-haired man, who identifies as gender nonconforming (left), as a woman. Unanswered: how they would know if he identified as a woman, an inner condition that cannot necessarily be determined visually.

It’s not clear how companies could design facial recognition software that didn’t rely on shared characteristics commonly recognized as indicative of one sex or another. Some of these are not socially constructed: Women tend to have rounder, softer features than men, who have more angular features.

Read the study and Quartz report.

IMAGES: Nat Ax/Shutterstock, University of Colorado-Boulder
